Models

Text completion models

The langchain.llms package provides wrappers for several large language model APIs that perform text completion.

OpenAI

OpenAI is an AI company whose products include large language models such as the GPT family, the DALL-E image generation models, and the Whisper speech-to-text model.

To use OpenAI models, first install the openai package:

pip install openai

In order to use the OpenAI API, an API key needs to be exported as an environment variable:

export OPENAI_API_KEY=<secret_key>
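The wrapper picks up the key from this variable, so a script can fail early with a clear message when the export step was skipped. The sketch below uses only the Python standard library; require_env is a hypothetical helper, not part of LangChain or the openai package.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or stop with a readable error.

    Hypothetical helper for illustration; it is not part of LangChain.
    """
    value = os.environ.get(name)
    if not value:  # treats both "unset" and "set to an empty string" as missing
        raise RuntimeError(
            f"{name} is not set; export it before creating the wrapper, "
            f"e.g. export {name}=<secret_key>"
        )
    return value
```

Calling require_env("OPENAI_API_KEY") before constructing the wrapper would then report the missing key directly, rather than leaving it to surface later inside the library.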

To generate text, create an OpenAI wrapper object and call it with a prompt:

from langchain.llms import OpenAI

llm = OpenAI()

prompt = "Where do giraffes live?"

output = llm(prompt)

print(output)

Output:

Giraffes live in the savannas and open woodlands of Africa.

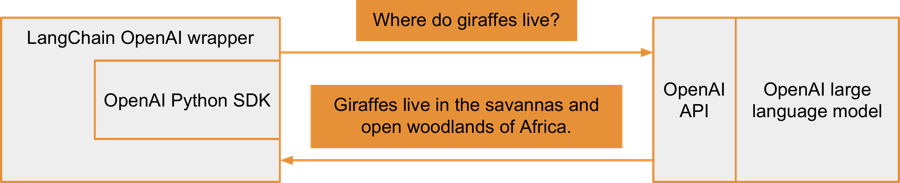

The diagram illustrates how the LangChain OpenAI wrapper is utilised to interface with OpenAI's language models:

Temperature

The temperature parameter controls how random the output of OpenAI's language models is. Lower values (for instance, 0.0) yield focused, nearly deterministic responses, whereas higher values (such as 0.9) produce more varied and creative outputs. The appropriate value depends on the application and the desired outcome.
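OpenAI does not publish the exact details of its sampler, but temperature is commonly described as dividing the model's raw next-token scores (logits) by T before a softmax. The standard-library sketch below illustrates that textbook formulation with made-up scores for three hypothetical candidate words; treating T = 0 as always picking the top-scoring word (greedy decoding) is also an assumption of the sketch.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities, sharpened (low T) or flattened (high T)."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        # Limit case: all probability on the highest-scoring candidate (greedy decoding).
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracting the maximum avoids overflow in exp()
    weights = [math.exp(x - peak) for x in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample_token(tokens: list[str], logits: list[float], temperature: float,
                 rng: random.Random) -> str:
    """Draw one candidate according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates and scores, chosen only to make the effect visible.
tokens = ["savannas", "woodlands", "zoos"]
logits = [2.0, 1.0, 0.1]
rng = random.Random(0)  # fixed seed so the demo is reproducible

for temperature in (0.0, 0.7, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    picks = [sample_token(tokens, logits, temperature, rng) for _ in range(5)]
    print(temperature, [round(p, 2) for p in probs], picks)
```

At low temperature almost all of the probability lands on the top candidate, which is why repeated calls return near-identical text; at T = 2 the candidates end up much closer in probability, so unlikely words are picked far more often.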

The default value for the temperature parameter when initialising the OpenAI wrapper is 0.7.

The temperature can be set by passing it to the OpenAI wrapper's constructor:

llm = OpenAI(temperature=0)

Below are several outputs for the prompt "Where do giraffes live?" at different temperature values.

For a temperature of 0, repeated calls to the OpenAI API return the same or very similar outputs:

Giraffes live in the savannas and open woodlands of Africa.

Giraffes live in the savannas and open woodlands of Africa.

Giraffes live in the savannas and open woodlands of Africa.

When the temperature is increased to 0.9, the outputs vary more but still make sense:

Giraffes live in the savannas of Sub-Saharan Africa.

Giraffes are found in the savannas and open woodlands of Africa. They are found in countries such as Botswana, Kenya, Namibia, South Africa, Uganda, and Zimbabwe.

Giraffes live in the savannas of Africa, specifically in the countries of Kenya, Tanzania, Uganda, Niger, Cameroon, and South Africa.

However, as the temperature approaches its maximum allowed value of 2, the output becomes highly random and no longer makes sense:

Giraffes inhabit the Savannah pans, particularly secondary tree savanna, showing densely packed patches displaying rough waving bloc Hes exposed rocky areas scatterwood clusters butt acQuartz, sand stones disperse and close content all bat wood assortment stuff barely topping that adequate permanent shallow canals courses just flow center wantby...

Model

OpenAI provides several language models. By default, the wrapper uses text-davinci-003.

The text-davinci-003 model belongs to the GPT-3.5 family and is designed to follow instructions. Another model in this family, gpt-3.5-turbo, offers both higher performance and lower cost; it is a chat model, so it is typically used through the ChatOpenAI wrapper described below.

The text-ada-001 model is an inexpensive but less capable member of the GPT-3 family. It can be selected through the model_name argument of the OpenAI wrapper:

llm = OpenAI(model_name="text-ada-001")

Hugging Face Hub

Hugging Face Hub is a platform from Hugging Face, an artificial intelligence company, that lets users store and share pre-trained models and datasets. Notable large language models hosted on the platform include Dolly by Databricks and StableLM by Stability AI.

To use models stored on the Hugging Face Hub, install the huggingface_hub package:

pip install huggingface_hub

Next, export a Hugging Face Hub API token as an environment variable:

export HUGGINGFACEHUB_API_TOKEN=<secret_token>

LangChain provides HuggingFaceHub, a wrapper for interacting with models hosted on the Hugging Face Hub. Each model on the Hub is uniquely identified by its organisation name and model name, written as organisation/model.
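Because the identifier has a fixed organisation/model shape, a typo can be caught before any request reaches the Hub. split_repo_id below is a hypothetical helper, not part of LangChain or huggingface_hub, and its strict two-part rule is an assumption that fits the examples in this section (the Hub also hosts some older models without an organisation prefix, which this check would reject).

```python
def split_repo_id(repo_id: str) -> tuple[str, str]:
    """Split a Hub identifier of the form "<organisation>/<model>" into its two parts.

    Hypothetical helper: it only checks the shape of the string and does not
    contact the Hugging Face Hub.
    """
    parts = repo_id.split("/")
    if len(parts) != 2 or not all(parts):
        raise ValueError(f"expected '<organisation>/<model>', got {repo_id!r}")
    organisation, model = parts
    return organisation, model


for repo_id in ("stabilityai/stablelm-tuned-alpha-3b", "databricks/dolly-v2-3b"):
    org, model = split_repo_id(repo_id)
    print(f"organisation={org} model={model}")
```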

For example, the stablelm-tuned-alpha-3b model belongs to the stabilityai organisation, which is associated with Stability AI, so its identifier is stabilityai/stablelm-tuned-alpha-3b. This identifier is passed as the repo_id argument to select the model:

from langchain.llms import HuggingFaceHub

repo_id = "stabilityai/stablelm-tuned-alpha-3b"

llm = HuggingFaceHub(repo_id=repo_id)

prompt = "Where do giraffes live?"

output = llm(prompt)

print(output)

Output:

You can't find a giraffe in a zoo.

The Dolly model from Databricks can be used in the same way:

from langchain.llms import HuggingFaceHub

repo_id = "databricks/dolly-v2-3b"

llm = HuggingFaceHub(repo_id=repo_id)

prompt = "Where do giraffes live?"

output = llm(prompt)

print(output)

Output:

Giraffes are found in sub-Saharan Africa

Chat models

Chat models are built on large language models. Whereas text completion models take text as input and return text as output, chat models work with chat messages exchanged between the client and the model. A chat model can still be used for text completion by sending a single message and reading the response.
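In LangChain, this corresponds to passing a one-element list containing a HumanMessage to the chat model. The dependency-free sketch below models the idea with plain (role, content) tuples; complete and fake_chat are hypothetical names, and fake_chat is an offline stand-in for a real chat model.

```python
from typing import Callable

# Assumption for illustration: a chat model is any function that takes a list of
# (role, content) pairs and returns the reply text.
ChatFn = Callable[[list[tuple[str, str]]], str]


def complete(chat: ChatFn, prompt: str) -> str:
    """Use a chat model for plain text completion: send one human message, return the reply."""
    return chat([("human", prompt)])


def fake_chat(messages: list[tuple[str, str]]) -> str:
    """Hypothetical offline stand-in that echoes the last message it received."""
    _, content = messages[-1]
    return f"({len(messages)} message(s) received) You asked: {content}"


print(complete(fake_chat, "Where do giraffes live?"))
```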

ChatOpenAI

OpenAI provides several chat models, such as gpt-3.5-turbo and gpt-4.

LangChain defines several types of chat message:

- System message (SystemMessage): sets the initial behaviour of the chat model, for example acting as a helpful assistant that answers questions about a specific topic.

- Human message (HumanMessage): a message sent by the client.

- AI message (AIMessage): a message returned by the chat model.

Each call to the chat model returns an AI message. Because each request is independent, the full conversation history is typically sent along with every new message so that the model has context.
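The bookkeeping described above can be sketched without any API calls. Message, Conversation and fake_chat are hypothetical names; the mapping of human and AI messages to the "user" and "assistant" roles follows the naming in OpenAI's chat API, and fake_chat simply reports how many messages it received, which shows the history growing with each turn.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    role: str  # "system", "human" or "ai", mirroring LangChain's three message types
    content: str


# OpenAI's chat API names the human side "user" and the model side "assistant".
OPENAI_ROLES = {"system": "system", "human": "user", "ai": "assistant"}


@dataclass
class Conversation:
    """Hypothetical helper: stores the history and resends all of it on every call."""

    chat: Callable[[list[dict[str, str]]], str]  # stand-in for a real chat model
    history: list[Message] = field(default_factory=list)

    def send(self, text: str) -> str:
        self.history.append(Message("human", text))
        # The model keeps no state between requests, so the whole history goes out each time.
        payload = [{"role": OPENAI_ROLES[m.role], "content": m.content} for m in self.history]
        reply = self.chat(payload)
        self.history.append(Message("ai", reply))
        return reply


def fake_chat(payload: list[dict[str, str]]) -> str:
    """Offline stand-in that reports how much context it was given."""
    return f"seen {len(payload)} messages"


conversation = Conversation(
    fake_chat,
    [Message("system", "You are an assistant which answers questions about cooking.")],
)
print(conversation.send("Give a name of a single vegetable."))  # prints: seen 2 messages
print(conversation.send("How can it be cooked?"))  # prints: seen 4 messages
```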

Let's examine how to use these with LangChain:

from langchain.chat_models import ChatOpenAI
from langchain.schema import HumanMessage, SystemMessage

chat = ChatOpenAI(temperature=0)

messages = [
    SystemMessage(
        content="You are an assistant which answers questions about cooking."
    ),
    HumanMessage(content="Give a name of a single vegetable."),
]

# The chat model returns an AIMessage containing its answer.
result = chat(messages)
print(result.content)

# Append the AI reply and a follow-up human message, so the model sees the whole conversation.
messages.append(result)
messages.append(HumanMessage(content="How can it be cooked?"))

print(chat(messages).content)

The conversation for the snippet above would appear as follows:

System: You are an assistant which answers questions about cooking.

Human: Give a name of a single vegetable.

AI: Broccoli.

Human: How can it be cooked?

AI: Broccoli can be cooked in many ways, including:

- Steaming: Place the broccoli florets in a steamer basket and steam for 5-7 minutes until tender.

- Roasting: Toss broccoli florets with olive oil, salt, and pepper, and roast in the oven at 400°F for 15-20 minutes until crispy and tender.

- Stir-frying: Heat oil in a wok or skillet, add broccoli florets and stir-fry for 3-5 minutes until tender-crisp.

- Boiling: Bring a pot of salted water to a boil, add broccoli florets and cook for 3-5 minutes until tender.

- Grilling: Brush broccoli florets with olive oil and grill over medium-high heat for 5-7 minutes until charred and tender.
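A transcript in the System/Human/AI form shown above can be rendered from a list of messages. In the minimal sketch below, (role, content) tuples stand in for LangChain's message objects to keep the example dependency-free, and format_transcript is a hypothetical helper; only the opening turns of the conversation are included.

```python
# Display label for each message type, as used in the transcript above.
PREFIXES = {"system": "System", "human": "Human", "ai": "AI"}


def format_transcript(messages: list[tuple[str, str]]) -> str:
    """Render (role, content) pairs as one "Label: text" line per message."""
    return "\n".join(f"{PREFIXES[role]}: {content}" for role, content in messages)


conversation = [
    ("system", "You are an assistant which answers questions about cooking."),
    ("human", "Give a name of a single vegetable."),
    ("ai", "Broccoli."),
    ("human", "How can it be cooked?"),
]
print(format_transcript(conversation))
```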