Agents

In LangChain, agents are autonomous entities that use a large language model as their reasoning engine. An agent gives the model access to a set of tools, which it can use to perform actions such as browsing the internet or running commands on a local computer. Agents are flexible: they accept user input, decide how to approach the problem, and carry out the necessary steps until they determine that the problem has been sufficiently addressed.

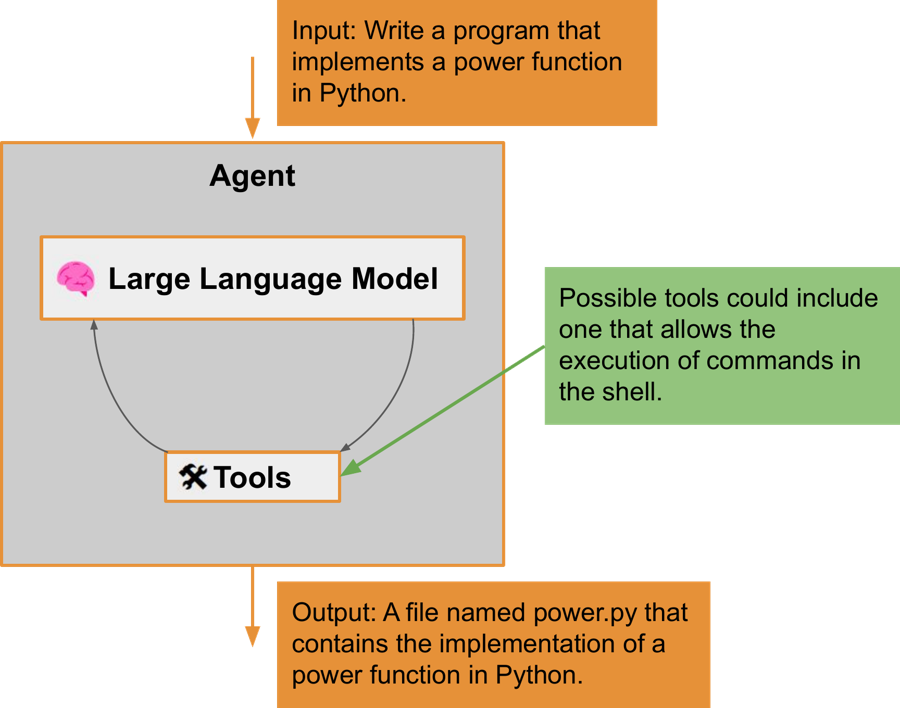

Let's examine a simple example. An agent will be tasked with writing a power function in Python. The agent will be equipped with a tool that enables it to execute commands in the shell on the local machine, thereby allowing it to write files and run the Python executable.

The input for this agent is "Write a program that implements a power function in Python." Using the large language model, the agent works out what the task requires and plans how to solve it with the available tools. For instance, it may create a file containing the implementation of the power function. Once this is done, it determines that the task is complete and stops execution.
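The decide, act, repeat cycle described above can be sketched without LangChain. In this minimal sketch, a hypothetical `scripted_llm` function stands in for the large language model (it simply replays a fixed plan) and a hypothetical `write_file` tool stands in for the shell by storing files in memory; neither is part of LangChain or any other library.

```python
from typing import Callable

# Hypothetical tool: stores "files" in an in-memory dict instead of touching disk.
files: dict[str, str] = {}

def write_file(arg: str) -> str:
    name, _, content = arg.partition("|")
    files[name] = content
    return f"wrote {name}"

TOOLS: dict[str, Callable[[str], str]] = {"write_file": write_file}

def scripted_llm(task: str, history: list[tuple[str, str]]) -> tuple[str, str]:
    """Stand-in for the LLM: returns (tool_name, tool_input) or ("finish", answer)."""
    if not history:
        return "write_file", "power.py|def power(base, exponent):\n    return base ** exponent\n"
    return "finish", "power.py has been written"

def run_agent(task: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):
        action, arg = scripted_llm(task, history)
        if action == "finish":            # the model decided the task is done
            return arg
        result = TOOLS[action](arg)       # execute the chosen tool
        history.append((action, result))  # remember what happened for the next decision
    return "stopped: step limit reached"

print(run_agent("Write a program that implements a power function in Python."))
# prints: power.py has been written
```

The step limit is a safety net: a real model might never decide it is finished, so agent loops usually cap the number of iterations.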

ReAct

ReAct (Reason + Act), not to be confused with the React frontend JavaScript library, is a method that prompts a large language model to reason about a problem based on the input and to decide on the next action needed to reach the final goal. An action may produce output (an observation) that is fed into the next iteration and used to generate the subsequent thought. The method was described in the paper "ReAct: Synergizing Reasoning and Acting in Language Models". According to the paper, keeping a record of the reasoning performed so far helps the model make better decisions about future actions, while the results of performed actions give it extra information that improves its reasoning.
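To illustrate how the record of reasoning is carried from one iteration to the next, here is a minimal sketch that assembles a ReAct-style prompt from the steps taken so far. The `Step` type and `build_prompt` function are hypothetical names chosen for this example, and the layout only approximates the prompt format shown later in this section.

```python
from dataclasses import dataclass

@dataclass
class Step:
    thought: str       # the model's reasoning in this iteration
    action: str        # the action it chose to perform
    observation: str   # the output produced by that action

def build_prompt(question: str, steps: list[Step]) -> str:
    """Replay every earlier Thought/Action/Observation so the model can build on them."""
    lines = [f"Question: {question}"]
    for step in steps:
        lines.append(f"Thought: {step.thought}")
        lines.append(f"Action: {step.action}")
        lines.append(f"Observation: {step.observation}")
    # The model continues from here, producing its next thought.
    lines.append("Thought:")
    return "\n".join(lines)

history = [
    Step(
        thought="I will validate the implementation by running a few test cases.",
        action="python3 test_power.py",
        observation="8\n1\n9\n0.01",
    )
]
print(build_prompt("Write and validate a power function in Python.", history))
```

Because the whole history is replayed on every call, the prompt grows with each iteration; this is the "record of performed reasoning" the paper credits with better decisions.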

Let's examine a code example that utilises an agent to solve the problem of writing and validating an implementation of the power function in Python:

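```python
# Uses the older LangChain API (initialize_agent / AgentType), deprecated in later releases.
from langchain.agents import initialize_agent, AgentType
from langchain.tools import ShellTool
from langchain.chat_models import ChatOpenAI

# The only tool available to the agent: a shell on the local machine.
# Caution: commands are executed without any sandboxing.
tools = [ShellTool()]

# GPT-4 as the reasoning engine; temperature=0 reduces randomness in its output.
# ChatOpenAI reads the API key from the OPENAI_API_KEY environment variable.
llm = ChatOpenAI(temperature=0, model_name="gpt-4")

# Chat model + zero-shot prompting + ReAct, with tools chosen by their descriptions.
agent = initialize_agent(
    tools, llm, agent=AgentType.CHAT_ZERO_SHOT_REACT_DESCRIPTION
)

# Start the Thought/Action/Observation loop with the task description.
agent.run(
    "Write and validate implementation of a power function in Python to a file named power.py."
)
```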

Through the ShellTool tool, the agent gains access to the shell on the local machine. The agent is of the CHAT_ZERO_SHOT_REACT_DESCRIPTION type, meaning that it uses a chat model (GPT-4 in this case), applies zero-shot prompting (no examples of similar, previously solved problems are included in the prompt), selects tools based solely on their descriptions, and employs the ReAct paradigm to solve the problem.

The interaction with the large language model proceeds as follows:

1. First, the large language model is informed about the available tools. In this case, it's only the shell tool:

```text
Answer the following questions as best you can. You have access to the following tools:

terminal: Run shell commands on this Linux machine.
```
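The tool list in this prompt is generated from each tool's name and description. A minimal sketch of that rendering step, assuming a hypothetical `Tool` record rather than LangChain's own classes; the comma-separated name list is the kind of string used to restrict the "action" field in the next instruction.

```python
from dataclasses import dataclass

@dataclass
class Tool:
    name: str
    description: str

def render_tools(tools: list[Tool]) -> str:
    # One "name: description" line per tool, as in the prompt above.
    return "\n".join(f"{t.name}: {t.description}" for t in tools)

def tool_names(tools: list[Tool]) -> str:
    # Comma-separated names, used to list the allowed values of "action".
    return ", ".join(t.name for t in tools)

tools = [Tool("terminal", "Run shell commands on this Linux machine.")]
print(render_tools(tools))  # terminal: Run shell commands on this Linux machine.
print(tool_names(tools))    # terminal
```

Since the description is all the model sees of a tool, its wording directly shapes when the model decides to use it.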

2. Next, the large language model is given instructions on how to utilise these tools:

```text
The way you use the tools is by specifying a json blob. Specifically, this json should have a `action` key (with the name of the tool to use) and a `action_input` key (with the input to the tool going here).

The only values that should be in the "action" field are: terminal

The $JSON_BLOB should only contain a SINGLE action, do NOT return a list of multiple actions. Here is an example of a valid $JSON_BLOB:

{
  "action": $TOOL_NAME,
  "action_input": $INPUT
}
```
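On the agent's side, the model's reply has to be turned back into a tool call. A minimal sketch of that parsing, assuming the reply contains exactly one JSON object as instructed; `parse_action` and `ALLOWED_TOOLS` are hypothetical names, not LangChain's actual output parser.

```python
import json

ALLOWED_TOOLS = {"terminal"}  # mirrors the allowed values of the "action" field

def parse_action(reply: str) -> tuple[str, str]:
    """Extract the single {"action": ..., "action_input": ...} blob from a model reply."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON blob found in reply")
    # json.loads raises json.JSONDecodeError (a ValueError) on malformed JSON,
    # including when two blobs are returned side by side.
    blob = json.loads(reply[start:end + 1])
    action = blob["action"]
    if action not in ALLOWED_TOOLS:
        raise ValueError(f"unknown tool: {action!r}")
    return action, blob["action_input"]

reply = 'Action:\n{\n  "action": "terminal",\n  "action_input": "ls"\n}'
print(parse_action(reply))  # ('terminal', 'ls')
```

Rejecting unknown tool names and multiple blobs up front keeps the agent from executing something the prompt never offered.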

3. The large language model is then guided on how to reason and plan its actions using the ReAct paradigm. It is also told how to end the execution, which is signalled by the Final Answer keyword:

```text
ALWAYS use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action:
$JSON_BLOB
Observation: the result of the action
... (this Thought/Action/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin! Reminder to always use the exact characters `Final Answer` when responding.
```
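The Final Answer keyword gives the agent a simple stopping rule. A minimal sketch of how a reply might be checked for it, assuming the exact `Final Answer:` prefix from the prompt; `extract_final_answer` is a hypothetical helper, not LangChain's implementation.

```python
from typing import Optional

FINAL_MARKER = "Final Answer:"

def extract_final_answer(reply: str) -> Optional[str]:
    """Return the text after 'Final Answer:', or None if the model wants another step."""
    idx = reply.find(FINAL_MARKER)
    if idx == -1:
        return None  # no marker: the reply should contain an Action to execute instead
    return reply[idx + len(FINAL_MARKER):].strip()

done = "Thought: I now know the final answer\nFinal Answer: The power function works."
print(extract_final_answer(done))  # The power function works.
print(extract_final_answer("Thought: run the tests\nAction: ..."))  # None
```

This is why the prompt insists on the exact characters: a paraphrase such as "Final result:" would not be recognised, and the loop would keep going.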

4. The problem is then presented to the large language model:

```text
Write and validate implementation of a power function in Python to a file named power.py.
```

5. Upon receiving the problem, the large language model starts working. It first decides to write the implementation of the power function to a local file by invoking the echo command in the shell. Because the command's output is redirected to the file, this action yields no output, so the observation is empty:

```text
Thought: I will write the power function in Python and save it to a file named power.py. Then, I will validate the implementation by running a few test cases.

Action:
{
  "action": "terminal",
  "action_input": "echo 'def power(base, exponent):\\n    return base ** exponent' > power.py"
}

Observation:
```
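To see what this action is meant to produce, the sketch below writes the same two-line module from Python and imports it. It is a rough equivalent of `echo '...' > power.py`, not what the agent ran; the temporary directory is an assumption made to keep the example self-contained. Note that the function body must be indented after the newline, otherwise Python rejects the file.

```python
import importlib.util
import tempfile
from pathlib import Path

# The content the echo command writes: a signature line and an indented body.
SOURCE = "def power(base, exponent):\n    return base ** exponent\n"

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "power.py"
    path.write_text(SOURCE)  # rough equivalent of: echo '...' > power.py
    # Load power.py as a module, as `import power` would from its directory.
    spec = importlib.util.spec_from_file_location("power", path)
    power = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(power)

print(power.power(2, 3))  # 8
```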

6. The next iteration focuses on validating the code. The agent writes a small test script, test_power.py, that calls the power function with a few test cases, runs it, and checks the printed output:

```text
Thought: Now that I have created the power.py file, I will validate the implementation by running a few test cases.

Action:
{
  "action": "terminal",
  "action_input": "echo 'import power\\n\\nprint(power.power(2, 3))\\nprint(power.power(5, 0))\\nprint(power.power(-3, 2))\\nprint(power.power(10, -2))' > test_power.py && python3 test_power.py"
}

Observation:
8
1
9
0.01
```
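Here the model checks the printed values itself in its next thought. As a suggested variation (not what the agent ran), the same four checks can be written as assertions, so that a wrong result raises an `AssertionError` and the script exits with a non-zero status instead of relying on the model to read the output. `math.isclose` is used for the negative exponent because that result is a float.

```python
import math

def power(base, exponent):
    return base ** exponent

# The same test cases the agent used, with their expected results.
assert power(2, 3) == 8
assert power(5, 0) == 1
assert power(-3, 2) == 9
assert math.isclose(power(10, -2), 0.01)
print("all checks passed")
```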

7. After validating the code, the large language model determines that it has completed its task and declares this by giving a Final Answer:

```text
Thought: I now know the final answer
Final Answer: The power function has been implemented and validated in the power.py file. The test cases have produced the expected results: 8, 1, 9, and 0.01.
```

The outcome of this execution is an implementation of the power function in Python, stored in the file named power.py, along with the test script test_power.py that the agent created to validate it.